Recurrent Neural Networks

Continuing my quest to analyse different datasets and learn basic deep learning techniques, I decided to use some variants of Recurrent Neural Networks (RNNs) and Long Short-Term Memory networks (LSTMs) to classify different articles of clothing and measure their prediction accuracy.

There are many great resources on the web with clear explanations and visualisations of RNNs and LSTMs. In short, both are methods that retain information from a sequence and the patterns within it. LSTMs add gating mechanisms that control which long-term information is kept and which more recent information is incorporated.
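As a rough illustration of that summary, here is a minimal NumPy sketch of one vanilla RNN step and one LSTM step. It is not taken from my analysis code: the function names, the weight shapes, the random weights and the `hidden_dim = 16` choice are all assumptions made for the example.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def rnn_step(x_t, h_prev, W_x, W_h, b):
    # Vanilla RNN: the new hidden state mixes the current input
    # with the previous hidden state.
    return np.tanh(x_t @ W_x + h_prev @ W_h + b)


def lstm_step(x_t, h_prev, c_prev, W, U, b):
    # W, U and b stack the parameters of the four gates side by side.
    z = x_t @ W + h_prev @ U + b
    i, f, g, o = np.split(z, 4)
    i, f, o = sigmoid(i), sigmoid(f), sigmoid(o)  # input, forget, output gates
    g = np.tanh(g)                                # candidate memory values
    c_t = f * c_prev + i * g                      # keep part of the old memory, add new
    h_t = o * np.tanh(c_t)                        # gated view of the memory
    return h_t, c_t


# Hypothetical sizes: 28 time steps of 28 features, 16 hidden units.
rng = np.random.default_rng(0)
input_dim, hidden_dim, seq_len = 28, 16, 28
sequence = rng.normal(size=(seq_len, input_dim))

W_x = rng.normal(scale=0.1, size=(input_dim, hidden_dim))
W_h = rng.normal(scale=0.1, size=(hidden_dim, hidden_dim))
h = np.zeros(hidden_dim)
for x_t in sequence:
    h = rnn_step(x_t, h, W_x, W_h, np.zeros(hidden_dim))

W = rng.normal(scale=0.1, size=(input_dim, 4 * hidden_dim))
U = rng.normal(scale=0.1, size=(hidden_dim, 4 * hidden_dim))
h_lstm, c = np.zeros(hidden_dim), np.zeros(hidden_dim)
for x_t in sequence:
    h_lstm, c = lstm_step(x_t, h_lstm, c, W, U, np.zeros(4 * hidden_dim))

print(h.shape, h_lstm.shape, c.shape)
```

The separate cell state `c` is what lets the LSTM carry information across many steps, while the forget and input gates decide how much of the old and new information to keep.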

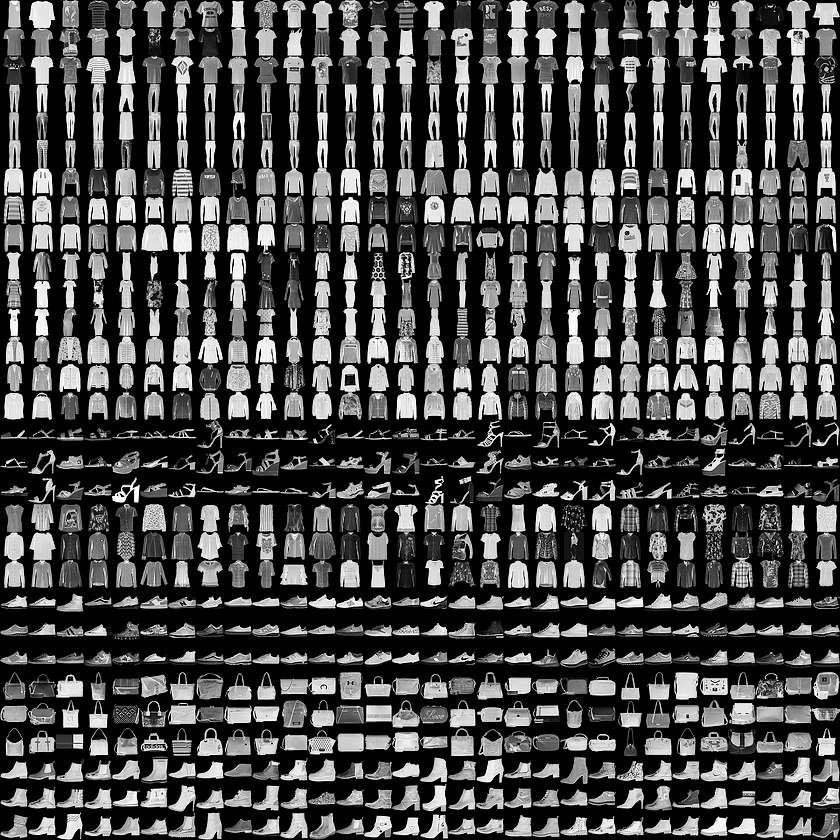

I analysed the Fashion-MNIST dataset and looked at how it can be fed into RNNs and LSTMs to train them and predict the type of clothing. I decided to look at both techniques to compare them and see in practice how LSTMs improve on the RNN framework.
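One common way to feed a 28x28 image into a recurrent model is to treat each row of pixels as one time step, giving a sequence of 28 steps with 28 features each. The sketch below assumes that convention and uses a random tensor as a stand-in for a real Fashion-MNIST batch; the batch size of 100 is also an assumption.

```python
import torch

# Stand-in for one batch from an image DataLoader:
# (batch, channel, height, width). Real pixel data is not loaded here.
images = torch.rand(100, 1, 28, 28)

seq_dim, input_dim = 28, 28  # 28 rows as time steps, 28 pixels per row

# Drop the channel axis so each image becomes a sequence of rows.
sequences = images.view(-1, seq_dim, input_dim)

print(sequences.shape)  # torch.Size([100, 28, 28])
```

With `batch_first=True`, this `(batch, seq, feature)` layout is what `nn.RNN` and `nn.LSTM` expect.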

Here is the RNN model, written in PyTorch:

    import torch
    import torch.nn as nn


    class RNNModel(nn.Module):
        def __init__(self, input_dim, hidden_dim, layer_dim, output_dim):
            super().__init__()
            # Number of features in the hidden state
            self.hidden_dim = hidden_dim
            # Number of stacked recurrent layers
            self.layer_dim = layer_dim
            # batch_first=True makes input/output tensors have shape
            # (batch_dim, seq_dim, input_dim), where batch_dim is the
            # number of samples per batch
            self.rnn = nn.RNN(input_dim, hidden_dim, layer_dim, batch_first=True, nonlinearity='relu')
            # Readout layer mapping the last hidden state to class scores
            self.fc = nn.Linear(hidden_dim, output_dim)

        def forward(self, x):
            # Initialise the hidden state with zeros: (layer_dim, batch_size, hidden_dim).
            # A fresh tensor has no gradient history, so nothing is carried over
            # between batches and no explicit detach is needed.
            h0 = torch.zeros(self.layer_dim, x.size(0), self.hidden_dim, device=x.device)
            out, hn = self.rnn(x, h0)
            # out holds the top-layer hidden state at every time step:
            # out.size() --> (100, 28, hidden_dim)
            # out[:, -1, :] --> (100, hidden_dim), the hidden state of the last time step
            out = self.fc(out[:, -1, :])
            # out.size() --> (100, 10)
            return out
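To check the tensor shapes mentioned in the comments, the short sketch below runs `nn.RNN` directly on a random batch. The hidden size of 100 and the two layers are assumptions for illustration, not the settings from my experiments.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical settings: 28 features per step, 100 hidden units, 2 layers.
rnn = nn.RNN(input_size=28, hidden_size=100, num_layers=2,
             batch_first=True, nonlinearity='relu')

x = torch.rand(100, 28, 28)  # (batch, seq, feature) stand-in batch
out, hn = rnn(x)             # the initial hidden state defaults to zeros

print(out.shape)  # torch.Size([100, 28, 100])
print(hn.shape)   # torch.Size([2, 100, 100])

# The last time step of `out` is the final hidden state of the top layer.
print(torch.allclose(out[:, -1, :], hn[-1]))  # True
```

This is why the model passes `out[:, -1, :]` to the readout layer: it is the summary of the whole sequence produced by the top recurrent layer.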

After conducting experiments with four different models (two RNN and two LSTM models with different parameters), the single-layer LSTM model worked best.
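For comparison with the RNN model, a single-layer LSTM classifier could be sketched as follows. This is not the exact model from my repository: the class name `LSTMModel`, the hidden size of 100 and the random inputs and labels are assumptions chosen to mirror the RNN code.

```python
import torch
import torch.nn as nn


class LSTMModel(nn.Module):
    def __init__(self, input_dim, hidden_dim, layer_dim, output_dim):
        super().__init__()
        self.hidden_dim = hidden_dim
        self.layer_dim = layer_dim
        self.lstm = nn.LSTM(input_dim, hidden_dim, layer_dim, batch_first=True)
        self.fc = nn.Linear(hidden_dim, output_dim)

    def forward(self, x):
        # The LSTM keeps two states per layer: hidden state h and cell state c.
        h0 = torch.zeros(self.layer_dim, x.size(0), self.hidden_dim, device=x.device)
        c0 = torch.zeros(self.layer_dim, x.size(0), self.hidden_dim, device=x.device)
        out, (hn, cn) = self.lstm(x, (h0, c0))
        # Classify from the hidden state of the last time step.
        return self.fc(out[:, -1, :])


# Hypothetical hyperparameters: one layer, 100 hidden units, 10 clothing classes.
model = LSTMModel(input_dim=28, hidden_dim=100, layer_dim=1, output_dim=10)

batch = torch.rand(100, 28, 28)        # stand-in for a batch of images
labels = torch.randint(0, 10, (100,))  # stand-in labels, not real data

logits = model(batch)
predicted = logits.argmax(dim=1)
accuracy = (predicted == labels).float().mean().item()

print(logits.shape)  # torch.Size([100, 10])
```

On an untrained model with random labels the accuracy value is meaningless; the point is only to show how predictions and accuracy are derived from the model output.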

To read more about this analysis, please visit my repository.

Alternatively, you could check out other sources such as:

- https://colah.github.io/posts/2015-08-Understanding-LSTMs/

- https://towardsdatascience.com/animated-rnn-lstm-and-gru-ef124d06cf45

This work is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.