Convolutional Neural Networks

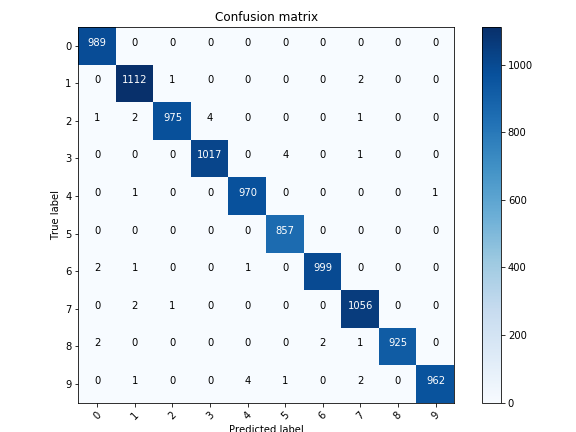

With the help of Deep Learning Wizard, I built a baseline Convolutional Neural Network to get a feel for what the model is doing. To try a different kind of dataset, I chose QMNIST, a variant of MNIST with more documentation on how the data was collected and labelled. In summary, the dataset contains 120,000 images of handwritten digits from 0 to 9. The goal is to feed the pixels of each image into the neural network and predict which digit it shows.
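To make the prediction step concrete, here is a minimal sketch in plain Python of how ten class scores can be turned into a predicted digit. It assumes the network produces one raw score per digit class; the helper names `softmax` and `predict_digit` and the example scores are made up for illustration and are not taken from the original code.

```python
import math

def softmax(scores):
    """Convert raw class scores (logits) into probabilities that sum to 1."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]  # subtract max for numerical stability
    total = sum(exps)
    return [e / total for e in exps]

def predict_digit(scores):
    """Return the index of the largest score, i.e. the predicted digit."""
    return max(range(len(scores)), key=lambda i: scores[i])

# Made-up scores for a single image, one entry per digit 0-9 (illustrative only)
example_scores = [0.1, -1.2, 0.3, 4.5, 0.0, 1.1, -0.7, 0.2, 0.9, -0.3]
probs = softmax(example_scores)

print(predict_digit(example_scores))  # 3
```

Softmax preserves the ordering of the scores, so the predicted digit is the same whether the argmax is taken over the raw scores or over the probabilities.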

I have provided some of the code used to define the model as well as the prediction results.

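```python
import torch
import torch.nn as nn

# Setup values below are assumptions; the original excerpt does not show them.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
num_classes = 10       # digits 0-9
learning_rate = 0.001  # assumed value, not taken from the original post


class ConvNet(nn.Module):
    # input_size and hidden_size are unused here; kept from the original signature.
    def __init__(self, input_size=784, hidden_size=500, num_classes=10):
        super(ConvNet, self).__init__()
        # Block 1: 1x28x28 -> 16x28x28 (conv) -> 16x14x14 (pool)
        self.layer1 = nn.Sequential(
            nn.Conv2d(1, 16, kernel_size=5, stride=1, padding=2),
            nn.BatchNorm2d(16),
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=2, stride=2))
        # Block 2: 16x14x14 -> 32x14x14 (conv) -> 32x7x7 (pool)
        self.layer2 = nn.Sequential(
            nn.Conv2d(16, 32, kernel_size=5, stride=1, padding=2),
            nn.BatchNorm2d(32),
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=2, stride=2))
        # Fully connected layer maps the flattened 32x7x7 features to class scores
        self.fc = nn.Linear(7*7*32, num_classes)

    def forward(self, x):
        out = self.layer1(x)
        out = self.layer2(out)
        out = out.reshape(out.size(0), -1)  # flatten to (batch, 7*7*32)
        out = self.fc(out)
        return out


# Pass num_classes by keyword; positionally it would bind to input_size
model = ConvNet(num_classes=num_classes).to(device)

# Loss and Optimizer
criterion = nn.CrossEntropyLoss()
optimizer = torch.optim.Adam(model.parameters(), lr=learning_rate)
```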
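The `7*7*32` passed to `nn.Linear` can look like a magic number, so here is a short sketch of where it comes from. It assumes 28x28 input images and applies the standard output-size formula for convolution and pooling layers, `floor((size + 2*padding - kernel) / stride) + 1`. The helper `conv_out` is a hypothetical name used only for this illustration.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output width/height of a convolution or pooling layer."""
    return (size + 2 * padding - kernel) // stride + 1

size = 28  # assumed input: 28x28 pixel images
trace = [size]
for _ in range(2):  # two conv + pool blocks with identical kernel settings
    size = conv_out(size, kernel=5, stride=1, padding=2)  # Conv2d keeps the size
    trace.append(size)
    size = conv_out(size, kernel=2, stride=2)             # MaxPool2d halves it
    trace.append(size)

flat_features = size * size * 32  # 32 output channels after the second block

print(trace)          # [28, 28, 14, 14, 7]
print(flat_features)  # 1568
```

With `kernel_size=5` and `padding=2` the convolutions preserve the spatial size, so only the two pooling layers shrink the image, from 28 to 14 to 7.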

To read more about the analysis, please visit my repository.

This work is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.